What is Neural Machine Translation

This article offers an insight into the features, pros and cons of Neural Machine Translation (NMT).

We live in the 21st century, and as technology continues to progress, we simply cannot deny how utterly fascinating the topic of artificial intelligence is and how widespread it has become in so many modern fields of application today.

One relevant example is the Translation and Localization industry, where machine translation is continuously being refined to maximize linguistic service output and shorten turnaround times for businesses worldwide.

Steadily growing global trade and the ever-increasing need for international communication between markets and cultures create favorable conditions for enterprises to integrate Neural Machine Translation systems (NMTs), with the aim of lowering their costs while sky-rocketing their productivity and volume coverage.

That being said, let’s delve deeper into the concept of NMT, look at its key features, workflow and applications, potential benefits and limitations, and draw conclusions as to whether it can truly be an asset for the industry or is just an idealistic translation and localization solution.

What is Neural Machine Translation?

NMT, or "Neural Machine Translation", is a cutting-edge, AI-powered approach to automated translation that works end to end, with the goal of overcoming common weaknesses of classical machine translation methods.

NMT is a state-of-the-art approach that takes advantage of deep learning methods and produces cleaner translation output than classic Machine Translation solutions. Common examples of NMT engines are DeepL and Mirai Translate.

It is the latest form of MT (Machine Translation) and uses artificial neural networks, loosely inspired by the human brain, which process data through multiple successive layers.

At the core of NMT are existing bilingual corpora and automated learning processes that steadily improve its efficiency, speed and quality.

How Neural Machine Translation differs from Phrase-Based Machine Translation (PBMT)

There are three main approaches to machine translation: rule-based, statistical and neural.


Rule-based Machine Translation:

(RBMT, also known as the "classical approach" to MT) relies on linguistic information about the source and target languages, drawn from monolingual, bilingual or multilingual dictionaries and from grammars covering the main semantic, morphological and syntactic regularities of each language.

Statistical Machine Translation:

(SMT) is a machine translation paradigm in which translations are generated by statistical models whose parameters are derived from the analysis of bilingual text corpora.

Phrase-Based Machine Translation:

(PBMT) is a form of statistical machine translation that translates sequences of words (phrases) rather than single words, using probabilistic models learned from previously translated bilingual texts. The quality of those texts strongly influences the final output.

The main difference that separates NMT from rule-based and statistical machine translation is that it uses vector representations of words, which makes NMT structurally simpler than previous MT models and systems. These vector representations, called word embeddings, map each word to a list of real numbers so that words used in similar contexts end up close to one another. Outside machine translation, embeddings are also used for tasks such as finding word analogies and analyzing the sentiment of a piece of writing.
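To make "similar words have similar vectors" concrete, here is a minimal sketch using cosine similarity and the classic king/queen analogy. The vectors are hand-made assumptions with only four dimensions, chosen for readability. Real NMT embeddings are learned from data and have hundreds of dimensions with no human-readable meaning.

```python
import math

# Hand-made 4-dimensional vectors, invented for illustration only. For readability the
# dimensions loosely stand for (royalty, male, female, fruit); real NMT embeddings have
# hundreds of learned dimensions with no human-readable meaning.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1, 0.0],
    "queen": [0.9, 0.1, 0.8, 0.0],
    "man":   [0.1, 0.8, 0.1, 0.0],
    "woman": [0.1, 0.1, 0.8, 0.0],
    "apple": [0.0, 0.0, 0.0, 0.9],
}

def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal (unrelated)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c):
    """Answer 'a is to b as c is to ?' by finding the word closest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])]
    candidates = [w for w in EMBEDDINGS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))

print(analogy("man", "king", "woman"))  # queen
```

Because "king" minus "man" plus "woman" lands almost exactly on "queen", the nearest remaining vector is "queen". "apple", which shares no dimension with the others, scores zero.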

Potential Benefits of NMT

According to a detailed technical report entitled "Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation" released in 2016, "the strength of NMT lies in its ability to learn directly, in an end-to-end fashion, the mapping from input text to associated output text."

Compared with conventional rule-based and phrase-based Machine Translation systems, NMT can also adapt the source to the target linguistically: it learns internally the kind of word alignment that older systems obtained from external alignment models, and it can break words into smaller sub-word units.
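Breaking words into smaller units lets a system with a fixed vocabulary still handle rare or unseen words. The sketch below uses greedy longest-match-first segmentation, one common way of applying a sub-word vocabulary at translation time. The tiny vocabulary and the "##" marker for word-internal pieces are illustrative assumptions. Real systems learn tens of thousands of pieces from training data.

```python
# Hypothetical toy sub-word vocabulary; real systems learn their pieces from data.
# Pieces that continue a word (rather than start it) are marked with "##".
VOCAB = {"trans", "##lat", "##ion", "##s", "un", "##believ", "##able", "##form", "##er"}

def segment(word, vocab=VOCAB, unk="[UNK]"):
    """Split a word into sub-word units by greedy longest-match-first search."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it one character at a time.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits, so the whole word is treated as unknown
        pieces.append(match)
        start = end
    return pieces

print(segment("translations"))  # ['trans', '##lat', '##ion', '##s']
print(segment("unbelievable"))  # ['un', '##believ', '##able']
```

Neither "translations" nor "unbelievable" is in the vocabulary as a whole word, yet both can be represented from smaller known pieces.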


Automation:

Over time, MT systems have been continuously upgraded with features that let them learn linguistic rules automatically from data, using statistical and, more recently, neural models.

NMT models are generally considered a superior successor to phrase-based models: they are likewise trained on parallel corpora, but they learn a much wider range of translation parameters from them.

As a result, NMT systems produce more accurate output for generic texts that are not dense with technical or specialized terminology, potentially reducing post-editing time by up to 25%.

A certain degree of applicability for under-resourced languages:

Neural Machine Translation systems can prove beneficial for some low-resource language pairs. They can "bridge the linguistic gaps" by learning local dependencies, word alignments and sentence reordering, thereby adding to the corpora available for the desired language combinations and enabling cross-lingual output.


Better lexical fluency than PBMT:

Compared with phrase-based systems, NMT shows higher inter-system variability, and its output is more fluent and more accurately inflected.

According to the findings of Luisa Bentivogli et al. in "Neural versus Phrase-Based Machine Translation Quality: a Case Study" (2016), NMT also outperforms purely phrase-based approaches in word reordering, morphology, syntax and agreement.

Compact memory usage:

NMT functions in a fairly compact way, utilizing only a fraction of the memory space required by SMT models.


End-to-end approach:

All components of an NMT system are trained jointly, end to end, which improves translation output and turnaround times compared with other classic MT approaches.

Whereas PBMT uses separate language, translation and reordering models, NMT relies on a single sequence model that predicts one word at a time.
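"Predicting one word at a time" means the model repeatedly picks a next word given the words produced so far, until it emits an end-of-sentence token. This sketch shows greedy decoding. The hypothetical `TOY_MODEL` table stands in for a trained decoder and only looks at the previous word. A real NMT model conditions on the whole encoded source sentence and the full prefix, and practical systems often keep several candidate prefixes (beam search) rather than only the single best one.

```python
# Hypothetical stand-in for a trained NMT decoder: maps the last generated word to
# probabilities for the next word. A real model would also look at the source sentence.
TOY_MODEL = {
    "<s>":    {"the": 0.7, "a": 0.3},
    "the":    {"cat": 0.6, "dog": 0.4},
    "a":      {"cat": 0.5, "dog": 0.5},
    "cat":    {"sleeps": 0.8, "</s>": 0.2},
    "dog":    {"sleeps": 0.5, "</s>": 0.5},
    "sleeps": {"</s>": 1.0},
}

def next_word_probs(prefix):
    return TOY_MODEL[prefix[-1]]

def greedy_decode(max_len=10):
    """Generate one word at a time, always taking the most probable next word,
    until '</s>' (end of sentence) is chosen or max_len words have been produced."""
    prefix = ["<s>"]
    while len(prefix) <= max_len:
        probs = next_word_probs(prefix)
        word = max(probs, key=probs.get)
        if word == "</s>":
            break
        prefix.append(word)
    return prefix[1:]  # drop the start-of-sentence token

print(greedy_decode())  # ['the', 'cat', 'sleeps']
```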

The sequence modelling is done using the ‘Encoder-Decoder’ approach where an encoder neural network reads and encodes a source sentence into a fixed-length vector and a decoder outputs a translation.
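The "fixed-length vector" idea can be illustrated with a bare-bones recurrent encoder. It reads the source words one by one and updates a hidden state h_t = tanh(W x_t + U h_{t-1}). Whatever the sentence length, the final state has the same size. The weights and word vectors below are random and untrained, and the hidden size of 4 is an arbitrary assumption. The decoder is omitted. Later architectures, including the Transformer, add attention so the decoder can look back at every source position instead of relying on this single summary vector.

```python
import math
import random

HIDDEN = 4  # size of the fixed-length sentence vector (arbitrary toy value)
rng = random.Random(0)

# Toy, untrained parameters; a real encoder learns these weights from parallel data.
W = [[rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)] for _ in range(HIDDEN)]  # input -> hidden
U = [[rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)] for _ in range(HIDDEN)]  # hidden -> hidden
_embeddings = {}

def embed(word):
    """Look up (or lazily create) a random toy embedding for a word."""
    if word not in _embeddings:
        _embeddings[word] = [rng.uniform(-1, 1) for _ in range(HIDDEN)]
    return _embeddings[word]

def matvec(m, v):
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]

def encode(sentence):
    """Read the sentence word by word; the final hidden state summarizes the whole sentence."""
    h = [0.0] * HIDDEN
    for word in sentence.split():
        x = embed(word)
        h = [math.tanh(a + b) for a, b in zip(matvec(W, x), matvec(U, h))]
    return h

short_vec = encode("der Zeuge")
long_vec = encode("der Sprecher des Untersuchungsausschusses hat angekündigt vor Gericht zu ziehen")
print(len(short_vec), len(long_vec))  # 4 4
```

A two-word and a ten-word sentence are both squeezed into four numbers. This illustrates why long sentences are harder for a pure fixed-length encoder.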

Limitations of NMT

However, NMT is definitely not a silver bullet. Its many limitations compared with a standard linguist-based TEP (Translation, Editing, Proofreading) process are undeniable and subject to continuous reassessment.

Lexical and contextual limitations

Although NMT is the most advanced form of machine translation in terms of contextual and lexical grasp, it has been found to display considerably lower lexical variety and density than human translation.
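Lexical variety can be roughly quantified. One simple proxy is the type-token ratio: distinct word forms divided by total words. The sketch below assumes naive lowercase tokenization on word characters. The ratio drops as text gets longer, so it is only meaningful when comparing texts of similar length. Lexical density (the share of content words) would additionally require part-of-speech tagging, which is omitted here.

```python
import re

def type_token_ratio(text):
    """Distinct word forms (types) divided by total words (tokens).
    A rough proxy for lexical variety: higher means less repetition."""
    tokens = re.findall(r"\w+", text.lower())
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)

print(type_token_ratio("the cat saw the dog"))  # 0.8
print(type_token_ratio("the big big big dog"))  # 0.6
```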

This "lack of lexical robustness" limits the applicability of NMT models in many domains and high-profile business sectors that require specific terminology and cannot tolerate such instability in lexical accuracy and versatility.

One specific example is the following excerpt from a legal text, cited in the Google AI Blog article "Robust Neural Machine Translation" (2019). The translation should unequivocally convey that the spokesman of a committee of inquiry intends to take the matter to court.

Table: Comparison between NMT target output based on altered source input

| German source | Transformer NMT English translation |
| --- | --- |
| Der Sprecher des Untersuchungsausschusses hat angekündigt, vor Gericht zu ziehen, falls sich die geladenen Zeugen weiterhin weigern sollten, eine Aussage zu machen. | The spokesman of the Committee of Inquiry has announced that if the witnesses summoned continue to refuse to testify, he will be brought to court. |
| Der Sprecher des Untersuchungsausschusses hat angekündigt, vor Gericht zu ziehen, falls sich die vorgeladenen Zeugen weiterhin weigern sollten, eine Aussage zu machen. | The investigative committee has announced that he will be brought to justice if the witnesses who have been invited continue to refuse to testify. |

NMT solutions deployed in such scenarios are highly sensitive to perturbations. Even a slight change to the source input can cause errors, the most common being under-translation, over-translation and outright mistranslation.
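A basic way to probe this sensitivity is to generate perturbed copies of a source sentence that should translate the same way, then compare the system's outputs. The sketch below uses simple dictionary-based near-synonym substitution. This is a plainly simpler technique than the adversarial methods in the Google research cited here. The `NEAR_SYNONYMS` table is a hypothetical example seeded with the substitution from the table above. Sentences are assumed to be already split into words, which for Chinese would require a word segmenter.

```python
# Hypothetical near-synonym table, seeded with the substitution discussed in this article.
NEAR_SYNONYMS = {
    "geladenen": ["vorgeladenen"],
}

def perturb(tokens, synonyms=NEAR_SYNONYMS):
    """Yield copies of a tokenized sentence in which exactly one word has been
    replaced by a near-synonym. Ideally, every variant translates the same way."""
    for i, tok in enumerate(tokens):
        for alt in synonyms.get(tok, []):
            yield tokens[:i] + [alt] + tokens[i + 1:]

source = "falls sich die geladenen Zeugen weiterhin weigern sollten".split()
for variant in perturb(source):
    print(" ".join(variant))  # falls sich die vorgeladenen Zeugen weiterhin weigern sollten
```

Each variant would then be sent to the MT system under test, and its translation compared with the translation of the unperturbed sentence.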

In the example above, replacing "geladenen" with its near-synonym "vorgeladenen" (both meaning "summoned" in this context) changed the model's output for the worse. The second translation drops "the spokesman" and turns the summoned witnesses into witnesses "who have been invited", resulting in a mistranslation.
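How much a single-word change in the source moved the output can be gauged with a crude surface measure. The Jaccard overlap below is the share of distinct words two texts have in common. It is applied to the two English outputs from the table. This is only an assumption-level illustration, not a translation-quality metric: low overlap signals that the output changed, not necessarily that it became wrong.

```python
import re

def word_set(text):
    return set(re.findall(r"\w+", text.lower()))

def jaccard(a, b):
    """Share of distinct words two texts have in common (1.0 = identical word sets)."""
    sa, sb = word_set(a), word_set(b)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 1.0

out_geladenen = ("The spokesman of the Committee of Inquiry has announced that if the witnesses "
                 "summoned continue to refuse to testify, he will be brought to court.")
out_vorgeladenen = ("The investigative committee has announced that he will be brought to justice "
                    "if the witnesses who have been invited continue to refuse to testify.")

print(round(jaccard(out_geladenen, out_vorgeladenen), 2))  # 0.58
print(sorted(word_set(out_geladenen) - word_set(out_vorgeladenen)))
# ['court', 'inquiry', 'of', 'spokesman', 'summoned']
```

The words lost in the second output include "spokesman" and "summoned", the very elements whose disappearance causes the mistranslation.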

Furthermore, "Robust Neural Machine Translation with Doubly Adversarial Inputs" (2019), a Google AI paper highlighting the vulnerability of NMT to noisy perturbations in the input, presents another relevant example from a text apparently of governmental or diplomatic origin. The example illustrates that NMT is prone to under-translation when the source input is slightly altered.

In the example, the Chinese input was translated back into English both by a Transformer NMT model and by an alternate Chinese-English NMT model, using both back-translated data and the original parallel data (Cheng, Jiang, Macherey, 2019, Google AI).

Table: Comparison between Transformer NMT and alternate NMT models for input and perturbed input

| Item | Text |
| --- | --- |
| Chinese input (perturbed variant in parentheses) | 这体现了中俄两国和两国议会间密切(紧密)的友好合作关系 |
| English reference | this expressed the relationship of close friendship and cooperation between China and Russia and between our parliaments |
| Transformer CHZ>ENG model back translation | this reflects the close friendship and cooperation between the two countries and the two parliaments. |
| Alternate CHZ>ENG MT model back translation | this reflects/embodied the close relations of friendship and cooperation between China and Russia and between their parliaments. |

The Chinese input reads "这体现了中俄两国和两国议会间密切(紧密)的友好合作关系", with the English reference “this expressed the relationship of close friendship and cooperation between China and Russia and between our parliaments”.

The input contains two near-synonymous words for "close" ("密切" and the perturbed "紧密"). The Transformer model's Chinese-to-English back translation (Vaswani et al.) drifts from the reference and produces a "noisy", approximate rendering, "this reflects the close friendship and cooperation between the two countries and the two parliaments", in which the country names are lost. The alternate Chinese-to-English back translation, by contrast, retains key elements of the reference such as the country names "China and Russia".
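Under-translation of this kind (key entities silently dropped) is one of the few errors that can be partly caught automatically. This minimal sketch checks whether key terms from the reference appear in each output, using the two back translations from the table. The key-term list is chosen by hand here as an assumption. The check is a naive case-insensitive substring test, so it misses paraphrases and can match inside longer words. Dedicated QA tools are considerably more sophisticated.

```python
def missing_terms(translation, key_terms):
    """Return the key terms (e.g. named entities from the reference) that do not
    appear in the translation, using a naive case-insensitive substring check."""
    lowered = translation.lower()
    return [term for term in key_terms if term.lower() not in lowered]

key_terms = ["China", "Russia", "parliaments"]  # hand-picked from the English reference
transformer_out = ("this reflects the close friendship and cooperation between "
                   "the two countries and the two parliaments.")
alternate_out = ("this reflects the close relations of friendship and cooperation between "
                 "China and Russia and between their parliaments.")

print(missing_terms(transformer_out, key_terms))  # ['China', 'Russia']
print(missing_terms(alternate_out, key_terms))    # []
```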

The example above shows that machine translation, although capable of rendering meaning from a source input with a certain degree of accuracy, still cannot match the linguistic "feel", robustness and consistency of human-driven translation.

Format limitations

Another considerable limitation of NMT is its limited flexibility in handling a wide range of file formats. Not all documents and format types are suitable for NMT, and implementing machine translation improperly can expose private and confidential data.

This is why it is paramount to work with a specialized Language Service Provider employing state-of-the-art translation security systems, skilled professionals and up-to-date technologies in order to properly manage the workflow.

Expertise limitations

With every great neural network comes great responsibility: the "synapses" of NMT cannot perform efficiently without the expertise and decision-making power of human engineers and architects.

NMT requires significant background expertise and experimentation, as any NMT model must be trained beforehand with large quantities of linguistic data and programmed to function in accordance with a wide range of parameters.

NMT systems are also prone to "short-term memory loss", often forgetting information gained from earlier in the text. This is much like the team-building game in which each person whispers to the next the message they just heard: by the end of the line, the message rarely matches the original.

Accuracy limitations

NMT tools are "trained" on a huge corpus of source-language segments and their translations for a particular language pair and subject matter. Their output must be constantly reviewed by professional translators, who check the machine-translated text against the source and correct it where necessary.
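The effort of this review can be measured by counting how many word edits the post-editor had to make. The sketch below computes a word-level Levenshtein distance (insertions, deletions, substitutions) and divides it by the length of the post-edited text. This is similar in spirit to the TER metric, but without TER's block-shift operation. The example sentences are illustrative assumptions, not real post-editing data.

```python
def word_edit_distance(hyp, ref):
    """Minimum number of word insertions, deletions and substitutions that turn hyp
    into ref (classic Levenshtein dynamic programming over words)."""
    h, r = hyp.split(), ref.split()
    prev = list(range(len(r) + 1))
    for i, hw in enumerate(h, start=1):
        cur = [i] + [0] * len(r)
        for j, rw in enumerate(r, start=1):
            cur[j] = min(prev[j] + 1,               # delete hw
                         cur[j - 1] + 1,            # insert rw
                         prev[j - 1] + (hw != rw))  # substitute (free if the words match)
        prev = cur
    return prev[-1]

def post_edit_rate(mt_output, post_edited):
    """Edits per word of the post-edited text; 0.0 means the MT output was kept as is."""
    n = len(post_edited.split())
    return word_edit_distance(mt_output, post_edited) / n if n else 0.0

mt = "he will be brought to court"            # illustrative MT output
pe = "he will take the matter to court"       # illustrative post-edited version
print(word_edit_distance(mt, pe))  # 3
```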

NMT translations can be unreliable, unpredictable or utterly unintelligible, and they come with no guarantee of accuracy or consistency, which makes faulty segments difficult to detect and correct automatically.
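Automatic checks can catch only gross failures. One common heuristic flags a segment for human review when the length of the translation is wildly out of proportion to the source. The thresholds below are hypothetical and would need tuning per language pair, since character counts differ greatly between scripts such as Japanese and English. A fluent but wrong translation, like the German example earlier in this article, passes such a check unnoticed.

```python
def flag_suspicious(source, target, low=0.5, high=2.0):
    """Flag a segment for human review if the target/source length ratio (in characters)
    falls outside [low, high]. The default thresholds are hypothetical placeholders."""
    if not source:
        return bool(target)  # any output for an empty source is suspicious
    ratio = len(target) / len(source)
    return not (low <= ratio <= high)

print(flag_suspicious("der Zeuge", "the witness"))  # False (ratio 11/9 is within range)
```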

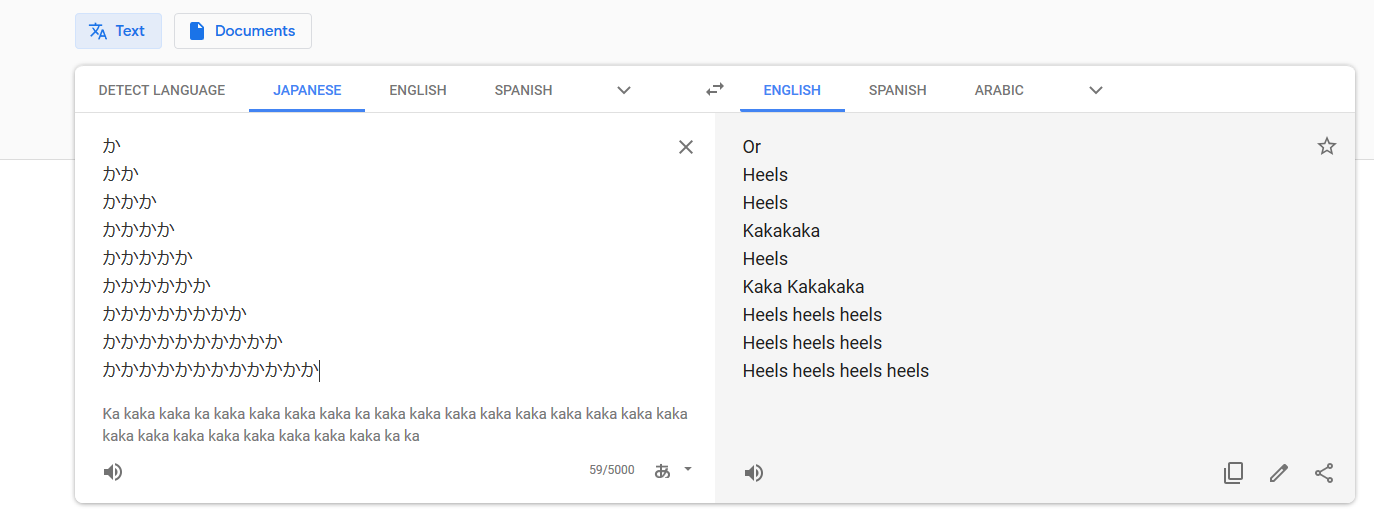

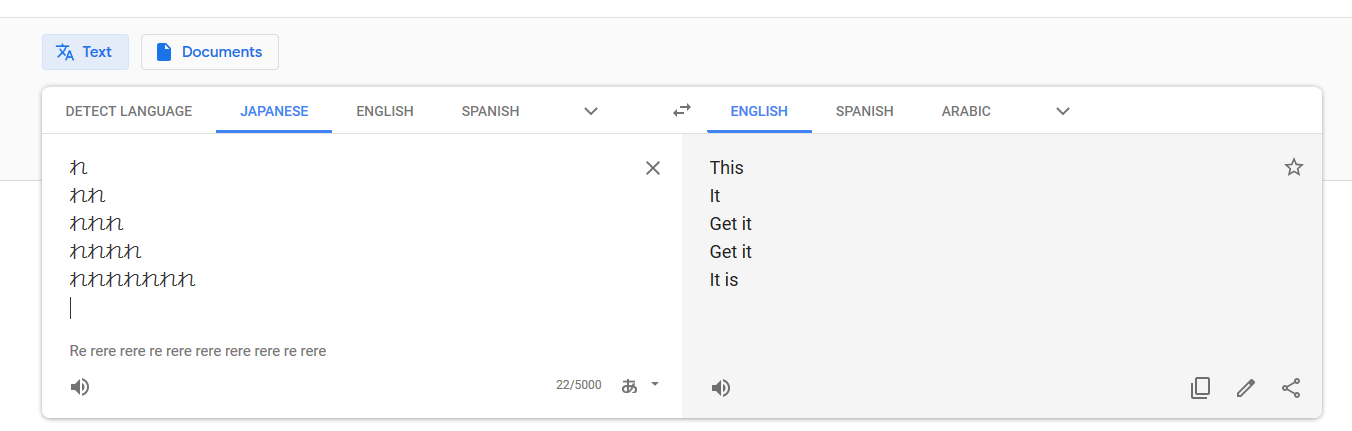

Amusing examples of NMT accuracy fallacies are given below, snapped from a Japanese to English gibberish equivalence of characters performed by Google Translate:

The examples show strings of the repeated hiragana characters か ("ka") and れ ("re"), which produce drastically different and seemingly random English output.

One can be completely oblivious of the source language and still infer that the output is highly nonsensical, inconsistent and ludicrous.

For this reason, any NMT workflow should involve at least one subject-matter expert in the Machine Translation Post-Editing stage, to avoid such comical linguistic scenarios.

Even so, NMT is still a long way from the quality ensured by three linguists working in the standard human-driven TEP (Translation, Editing, Proofreading) workflow, as three pairs of eyes are always better and more thorough than one in ensuring top-notch translation quality and consistency.

Conclusions

In this article we looked at NMT from several standpoints, covering its key features, workflow and applications, potential benefits and many limitations, in order to assess whether it can truly be an asset for the industry or is just an idealistic translation and localization solution.

Technology will undoubtedly continue to evolve, and more and more businesses will seek fast, automated and cost-efficient Translation and Localization solutions for their content types and linguistic needs.

However, it is of utmost importance to note that NMT should not be perceived as a replacement for a standard human-based TEP process, but as an automated alternative to it in a limited range of fields which do not require highly-specific terminology and in-depth knowledge of cultural regulations and sensitivities.

NMT is far from outperforming a fully human-driven TEP process with its full arsenal of translation technology, as NMT on its own cannot ensure consistency and accuracy without producing mistranslations and other output errors.

Here at AD VERBUM, we are always aiming to strengthen the translation digital synapses and guide you on your Translation and Localization journey through our in-depth knowledge and expertise in a wide range of fields, and most importantly through fully human-driven Translation and Localization services, harnessing state-of-the-art translation technology and processes.

After all, any map in pursuit of future goals is first drawn by a human hand.

Embark on your Translation and Localization journey with AD VERBUM.

References:

Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation (2016), Y. Wu, M. Schuster, Z. Chen, Q.V. Le, M. Norouzi, et al.

Neural versus Phrase-Based Machine Translation Quality: a Case Study (2016), Luisa Bentivogli, Arianna Bisazza, Mauro Cettolo, Marcello Federico.

Robust Neural Machine Translation (2019), Yong Cheng, Software Engineer, Google Research. Google AI Blog article published Monday, July 29, 2019.

Robust Neural Machine Translation with Doubly Adversarial Inputs (2019), Yong Cheng, Lu Jiang, Wolfgang Macherey.

Attention Is All You Need (2017), Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin. In Advances in Neural Information Processing Systems.